Victoria Elliott

As AI tools become more sophisticated and accessible, so does one of their worst applications: non-consensual deepfake pornography. While much of this content is hosted on dedicated sites, it is increasingly turning up on social platforms. Today, the Meta Oversight Board announced that it is taking on cases that could force the company to reckon with how it handles deepfake pornography.

The board, an independent body that can issue both binding decisions and recommendations to Meta, will focus on two cases of deepfake pornography, both involving celebrities whose images were altered to create explicit content. In one case, involving an unnamed American celebrity, deepfake porn depicting the celebrity was removed from Facebook after it had already been flagged elsewhere on the platform. The post was also added to Meta’s Media Matching Service Bank, an automated system that finds and removes images already flagged as violating Meta’s policies, in order to keep it off the platform.

In the other case, a deepfake image of an unnamed Indian celebrity remained up on Instagram, even after users reported it for violating Meta’s policies. The deepfake of the Indian celebrity was removed once the board took up the case, according to the announcement.

In both cases, the images were removed for violating Meta’s bullying and harassment policies, and did not fall under Meta’s policies on pornography. Meta does, however, prohibit “content that depicts, threatens, or promotes sexual violence, sexual assault, or sexual exploitation” and does not allow pornographic or sexually explicit ads on its platforms. In a blog post published alongside the announcement of the cases, Meta said it removed the posts for violating the “derogatory sexualized photoshops or drawings” portion of its bullying and harassment policy, and that it also “determined that it violated [Meta’s] adult nudity and sexual activity policy.”

The board hopes to use these cases to examine Meta’s policies and systems for detecting and removing non-consensual deepfake pornography, according to Julie Owono, a member of the Oversight Board. “I can tentatively already say that the main problem is probably detection,” she says. “Detection is not as perfect, or at least not as efficient, as we would wish.”

Meta has also long been criticized for its approach to moderating content outside the United States and Western Europe. In this case, the board has already expressed concern that the American celebrity and the Indian celebrity received different treatment in response to their deepfakes appearing on the platform.

“We know that Meta is quicker and more effective at moderating content in some markets and languages than others. By taking one case from the U.S. and one from India, we want to see whether Meta is protecting all women around the world equally,” says Helle Thorning-Schmidt, co-chair of the Oversight Board. “It is critical that this matter be addressed, and the board looks forward to exploring whether Meta’s policies and enforcement practices are effective at addressing this problem.”


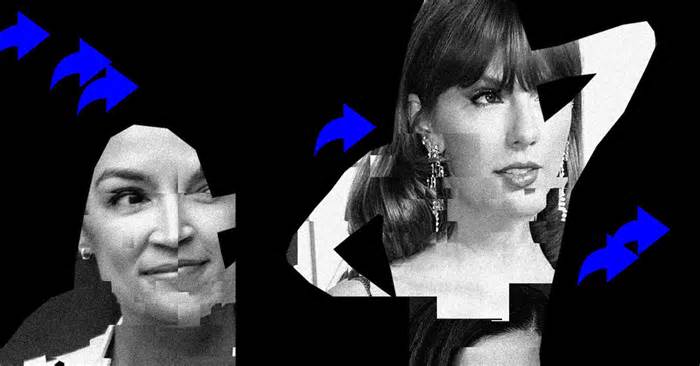

The board declined to name the Indian and American celebrities whose images prompted the complaints, but pornographic deepfakes of celebrities have become commonplace. A recent Channel 4 investigation found deepfakes of more than 4,000 celebrities. In January, a non-consensual deepfake of Taylor Swift went viral on Facebook, Instagram, and especially X, where one post garnered more than 45 million views. X resorted to blocking searches for the singer’s name, but posts continued to circulate. And while platforms struggled to remove the content, it was Swift’s fans who reportedly took to reporting and blocking accounts that shared the image. In March, NBC News reported that ads for a deepfake app running on Facebook and Instagram featured images of a nude, underage Jenna Ortega. In India, deepfakes have targeted top Bollywood actresses, including Priyanka Chopra Jonas, Alia Bhatt, and Rashmika Mandanna.

Since deepfakes emerged a decade ago, studies have shown that non-consensual deepfake pornography overwhelmingly targets women, and it has only continued to rise. Last year, reporting by WIRED found that 244,625 videos had been uploaded to the top 35 deepfake hosting sites, more than in any previous year. And it doesn’t take much to create a deepfake. In 2019, VICE found that just 15 seconds of an Instagram story was enough to create a believable deepfake, and the technology has only become more accessible. Last month, a Beverly Hills school expelled five students who had made non-consensual deepfakes of 16 of their classmates.

“Deepfake pornography is a growing cause of gender-based online harassment and is used to target, silence, and intimidate women online and offline,” says Thorning-Schmidt. “Several studies show that deepfake pornography overwhelmingly targets women. This content can be incredibly destructive to victims, and the tools used to create it are becoming more sophisticated and accessible.”

In January, lawmakers introduced the Disrupt Explicit Forged Images and Non-Consensual Edits Act, or DEFIANCE Act, which would allow people whose images were used in deepfake pornography to sue if they can show it was made without their consent. Congresswoman Alexandria Ocasio-Cortez, who sponsored the bill, was herself targeted by deepfake pornography earlier this year.

“Victims of non-consensual pornographic deepfakes have waited too long for federal law to hold perpetrators accountable,” Ocasio-Cortez said in a statement at the time. “As deepfakes become easier to access and create (96% of deepfake videos circulating online are non-consensual pornography), Congress needs to act to show victims that they won’t be left behind.”


© 2024 Condé Nast. All rights reserved. WIRED may earn a portion of sales from products purchased through our site as part of our affiliate partnerships with retailers. The material on this site may not be reproduced, distributed, transmitted, cached, or otherwise used, except with the prior written permission of Condé Nast.